The goal of sparse image-based rendering (IBR) is to synthesize novel views of a scene given images from a small number of camera viewpoints. The sparseness constraint rules out light fields and similar techniques that require a relatively dense sampling of the plenoptic function.

One approach to sparse IBR is to recover the scene geometry with a stereo rig and then use this geometry, together with color information, to synthesize a new view. Stereo approaches, however, often fail on scene patches with little or no texture: depth measurements are highly unreliable for objects of uniform color. Yet areas of uniform color only need to be rendered as uniform color in the view synthesis stage. In other words, uniform patches are precisely the regions where incorrect depth values matter least during rendering. So one may ask: can we achieve good rendering from sparse observations by exploiting this fact?

The paper Sparse IBR Using Range Space Rendering (BMVC 2003) proposes an approach dubbed range space matching (US patent 7,161,606). In outline (see the paper for details):

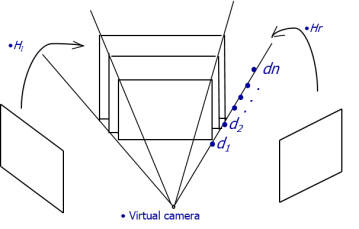

- Quantize the 3-D range space into constant-depth layers at each new viewpoint

- Warp the left and right images to each depth layer in the range space (pose estimation, rectification, resampling)

- Compute a match score for each layer using the sum of squared differences (SSD) or the sum of absolute differences (SAD)

- For each light ray (pixel), select the layer with the best matching score (winner takes all)

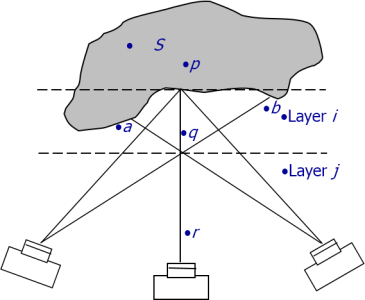

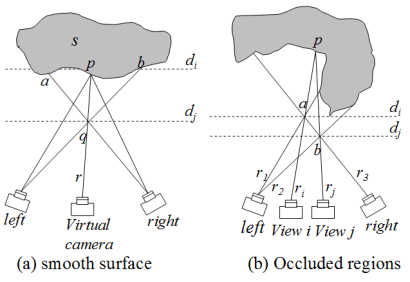
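The four steps above can be sketched in a few lines of NumPy. This is a simplified illustration, not the paper's implementation, and it rests on several assumptions: the two input images are already rectified with a purely horizontal baseline, the virtual camera lies on that baseline at fraction `alpha` (0 = left camera, 1 = right camera), and each constant-depth layer is therefore indexed by its left/right disparity, so warping reduces to a horizontal shift with nearest-neighbour resampling. The function names `shift_rows`, `box_sum` and `range_space_render` are hypothetical.

```python
import numpy as np

def shift_rows(img, dx):
    """Resample img at columns x + dx (nearest neighbour, edge-clamped)."""
    w = img.shape[1]
    cols = np.clip(np.round(np.arange(w) + dx).astype(int), 0, w - 1)
    return img[:, cols]

def box_sum(a, r):
    """Sum of a 2-D array over a (2r+1) x (2r+1) window, edge-padded."""
    k = 2 * r + 1
    c = np.pad(np.pad(a, r, mode="edge").cumsum(0).cumsum(1), ((1, 0), (1, 0)))
    return c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]

def range_space_render(left, right, disparities, alpha=0.0, r=2):
    """Render a virtual view at fraction alpha of the baseline by
    winner-takes-all over constant-depth layers (assumed rectified input)."""
    left, right = left.astype(float), right.astype(float)
    costs, colors = [], []
    for d in disparities:                          # one layer per disparity value
        wl = shift_rows(left, alpha * d)           # left image warped to the layer
        wr = shift_rows(right, -(1 - alpha) * d)   # right image warped to the layer
        err = (wl - wr) ** 2                       # use np.abs(wl - wr) for SAD
        if err.ndim == 3:                          # colour: sum over channels
            err = err.sum(axis=2)
        costs.append(box_sum(err, r))              # windowed SSD for this layer
        colors.append(0.5 * (wl + wr))             # colour this layer would render
    best = np.argmin(np.stack(costs), axis=0)      # winner takes all, per pixel
    rows, cols = np.indices(best.shape)
    return np.stack(colors)[best, rows, cols], best
```

With a synthetic pair such as `right = np.roll(left, -3, axis=1)` (true disparity 3) and `range_space_render(left, right, range(6))`, the returned layer index away from the left border is 3 and the rendered view matches `left` there. The rendered colour is taken from whichever layer wins, which is the property the method relies on.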

Note that the first step differs from traditional stereo: the range space is first discretized into a fixed number of planes. Depth computed this way may be incorrect, yet the rendering is good because the correct color is picked for each pixel.
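A toy 1-D illustration of why a wrong depth can still yield the right color. Assumptions for this sketch only: a single rectified scanline, a virtual view that coincides with the left camera, layers indexed by disparity, and ties broken in favour of the earliest layer. The function `render_scanline` is hypothetical.

```python
import numpy as np

def render_scanline(left, right, disps, r=1):
    """Winner-takes-all layer matching on one rectified scanline;
    the virtual view is assumed to coincide with the left camera."""
    w = left.size
    x = np.arange(w)
    best_cost = np.full(w, np.inf)
    best_d = np.zeros(w, dtype=int)
    color = np.zeros(w)
    for d in disps:
        wr = right[np.clip(x - d, 0, w - 1)]                 # right scanline warped to layer d
        err = np.pad((left - wr) ** 2, r, mode="edge")
        cost = sum(err[k:k + w] for k in range(2 * r + 1))   # windowed SSD
        better = cost < best_cost                             # strict '<': earliest layer wins ties
        best_cost[better] = cost[better]
        best_d[better] = d
        color[better] = (0.5 * (left + wr))[better]
    return best_d, color

rng = np.random.default_rng(1)
left = rng.random(50)
left[10:30] = 0.5                  # uniform (textureless) patch
right = np.roll(left, -3)          # true disparity is 3 everywhere
d, c = render_scanline(left, right, range(6))
```

Inside the uniform patch every layer scores zero, so the tie-break selects disparity 0 instead of the true value 3; the rendered color is nonetheless exactly 0.5, the correct value. In the textured region to the right of the patch, the true disparity 3 wins.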

Two problems remain: eliminating artifacts caused by repetitive patterns, and imposing consistency on the rendered scene.

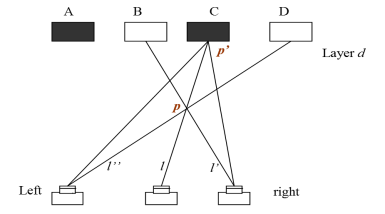

Repetitive patterns often cause the wrong color to be rendered, because an incorrect match (point P below) is selected over the true match (point P’). A coarse-to-fine matching strategy is used to mitigate this problem.

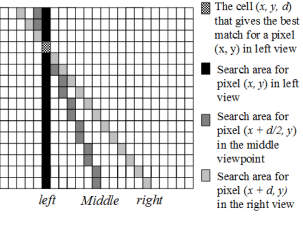
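One generic way to realize a coarse-to-fine strategy is a two-level image pyramid: match over the full disparity range at half resolution, then at full resolution let only layers near the upsampled coarse estimate compete. The coarse level sees larger-scale structure, which can help disambiguate candidates that look equally good at full resolution. This is a hedged sketch, not the paper's exact procedure; it assumes rectified images, a virtual view at the left camera, a search band of ±1 disparity at the fine level, and hypothetical helper names. For clarity the fine level computes every layer and then masks; a real implementation would evaluate only the allowed layers.

```python
import numpy as np

def shift(img, d):
    """Move the right image onto the left: sample column x - d (edge-clamped)."""
    cols = np.clip(np.arange(img.shape[1]) - d, 0, img.shape[1] - 1)
    return img[:, cols]

def window_ssd(a, b, r):
    """Per-pixel SSD between a and b over a (2r+1) x (2r+1) window."""
    e = np.pad((a - b) ** 2, r, mode="edge")
    h, w = a.shape
    return sum(e[i:i + h, j:j + w] for i in range(2 * r + 1) for j in range(2 * r + 1))

def wta(left, right, disps, r, allowed=None):
    """Winner-takes-all disparity; layers outside `allowed` are excluded."""
    cost = np.stack([window_ssd(left, shift(right, d), r) for d in disps])
    if allowed is not None:
        cost = np.where(allowed, cost, np.inf)
    return np.asarray(disps)[np.argmin(cost, axis=0)]

def downsample(img):
    """Halve resolution by averaging 2x2 blocks (odd edge row/column dropped)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def coarse_to_fine(left, right, max_disp, r=2):
    # Coarse level: half resolution, full (halved) disparity range.
    coarse = wta(downsample(left), downsample(right), np.arange(max_disp // 2 + 1), r)
    # Upsample the coarse estimate (x2 in space and in disparity) to full size.
    guess = 2 * np.kron(coarse, np.ones((2, 2), dtype=int))
    pad = ((0, left.shape[0] - guess.shape[0]), (0, left.shape[1] - guess.shape[1]))
    guess = np.pad(guess, pad, mode="edge")
    # Fine level: only layers within +/-1 of the upsampled coarse guess compete.
    disps = np.arange(max_disp + 1)
    allowed = np.abs(disps[:, None, None] - guess[None]) <= 1
    return wta(left, right, disps, r, allowed)
```

The width of the fine search band is the main design knob: a narrow band suppresses distant false matches from repeated texture, but it cannot recover if the coarse level itself chose the wrong layer.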

To impose scene consistency, for every pixel (x, y) in each new view, the corresponding depth information from the other viewpoints is collected. A function LocalVote(x, y) is applied to these values to check consistency; the vote is used to correct false matches and to detect occlusions. Depth values in occluded areas can then be filled by simple interpolation.

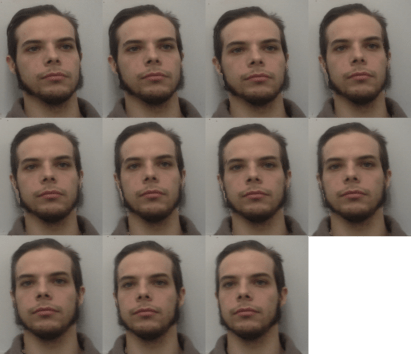
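The text does not specify the form of LocalVote, so the following is only one plausible reading, with every detail an assumption: the depth-layer indices estimated at K other viewpoints have already been reprojected into the new view (that reprojection is omitted), the vote is a per-pixel majority over those K candidates, pixels where fewer than `min_votes` views agree are flagged as occluded or unreliable, and flagged pixels are filled by linear interpolation along each row. `local_vote` and `fill_occlusions` are hypothetical names.

```python
import numpy as np

def local_vote(candidates, min_votes):
    """candidates: (K, h, w) non-negative layer indices from K views, already
    reprojected into the new view. Returns the per-pixel majority layer, or -1
    where fewer than min_votes views agree (treated as occluded/unreliable)."""
    n_layers = candidates.max() + 1
    # counts[l, y, x] = number of views voting for layer l at pixel (x, y)
    counts = np.stack([(candidates == l).sum(axis=0) for l in range(n_layers)])
    winner = counts.argmax(axis=0)
    support = counts.max(axis=0)
    return np.where(support >= min_votes, winner, -1)

def fill_occlusions(layers):
    """Replace -1 entries by linear interpolation along each row."""
    out = layers.astype(float)
    for row in out:                      # each row is a view into `out`
        bad = row < 0
        if bad.any() and (~bad).any():
            x = np.arange(row.size)
            row[bad] = np.interp(x[bad], x[~bad], row[~bad])
    return out

cand = np.array([[[2, 2, 2, 5, 2]],
                 [[2, 2, 3, 6, 2]],
                 [[2, 1, 3, 7, 4]]])    # three views, one 1x5 row
voted = local_vote(cand, min_votes=2)
filled = fill_occlusions(voted)
```

Here `voted` is `[[2, 2, 3, -1, 2]]`: the fourth pixel has no agreement and is flagged, while isolated outliers such as the 1 and 4 in the third view are outvoted. `fill_occlusions` then assigns the flagged pixel 2.5, an interpolated layer index, which corresponds to a depth between two layers.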

The results are quite good, and the procedure is indeed sparse in the number of camera views. This example shows views reconstructed from only two cameras (only the first and last faces are real photos):